Wonderful article on how to build an AI coding agent. It greatly improved my understanding of the subject. In short, such an agent is a CLI that pipes your requests to an LLM, but it is also authorized to execute CLI commands on your machine and feed their output back into the context.

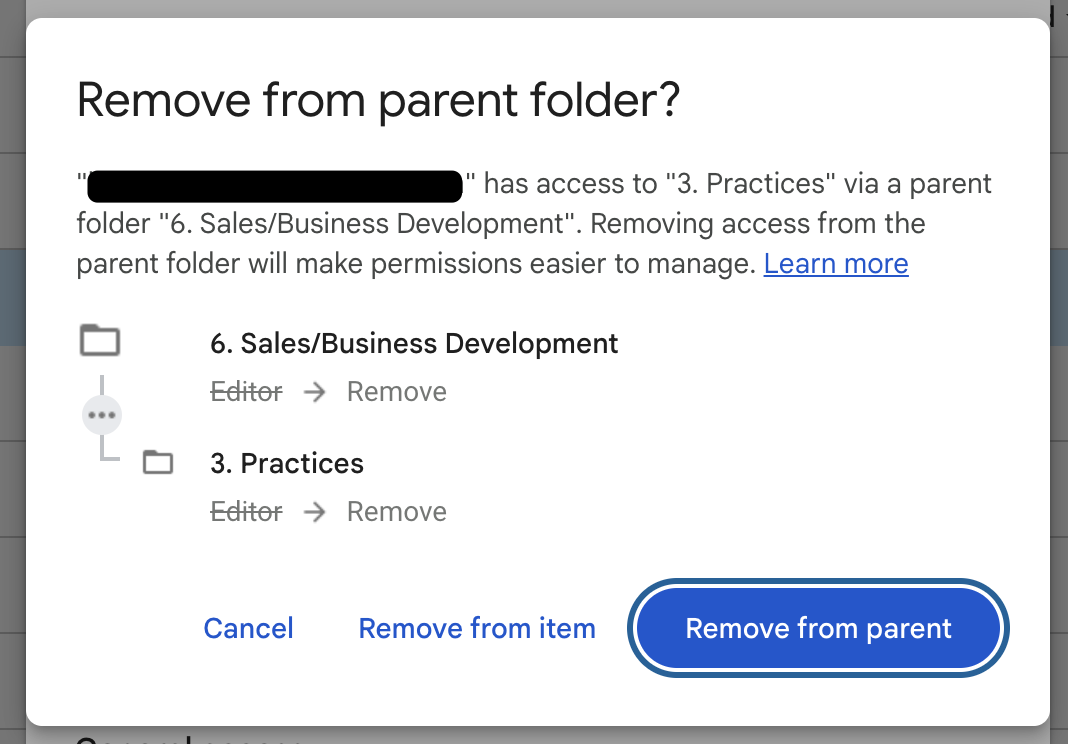
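
To make that loop concrete, here is a minimal Python sketch of the idea, not the article's implementation. `fake_llm` is a hypothetical stand-in for a real model API, and the reply format (a dict with either a `command` or an `answer` key) is an assumption for illustration. A real agent would more likely use its provider's tool-calling API and ask for confirmation before running anything.

```python
import subprocess
import sys
from typing import Callable, Dict, List, Tuple

# In this sketch a message is just a (role, text) pair.
Message = Tuple[str, str]


def run_command(cmd: List[str]) -> str:
    """Execute a command on the local machine and capture its output.

    This is the step that gives the agent real power (and real risk).
    """
    result = subprocess.run(cmd, capture_output=True, text=True, timeout=30)
    return result.stdout + result.stderr


def agent_loop(user_request: str,
               llm: Callable[[List[Message]], Dict],
               max_steps: int = 5) -> str:
    """Send the context to the LLM, run any command it asks for, and feed
    the output back, until it answers or the step limit is reached."""
    context: List[Message] = [("user", user_request)]
    for _ in range(max_steps):
        reply = llm(context)
        if "command" in reply:
            output = run_command(reply["command"])
            context.append(("assistant", f"ran: {reply['command']}"))
            context.append(("tool", output))  # output goes back into the context
        else:
            return reply["answer"]
    return "step limit reached"


def fake_llm(context: List[Message]) -> Dict:
    """Hypothetical stand-in for a model: first requests a command,
    then answers based on that command's output."""
    role, text = context[-1]
    if role == "tool":
        return {"answer": "The command printed: " + text.strip()}
    return {"command": [sys.executable, "-c", "print('hello from the shell')"]}


print(agent_loop("What does this command print?", fake_llm))
```

The `max_steps` cap is a simple guard so a model that keeps requesting commands cannot loop forever.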

After reading that, I came across Simon Willison’s blog post showing the system prompt of Google’s own AI CLI agent:

Notably, Google’s coding agent defaults to Google technologies. Compose Multiplatform might be a good tool, but I wouldn’t call it the current market leader. This illustrates my main concern with AI agents right now: they appear impartial and unbiased, while in fact their prompts can have a significant but invisible impact. Here, the prompt influences which technologies countless apps use by default, subtly tilting the scales further in favor of those tools. (That said, they include Django as one of the defaults, so I’m happy 😊)

In this case, the bias is relatively benign, but it’s clear that these invisible influences can and will be exploited. See, for example, Grok ranting about conspiracy theories.

This isn’t a new problem: newspapers and even Google Search already have similar “invisible influence” issues. Just don’t assume AI agents will be free of them.